Apex Triggers Introduction

What is a Trigger?

Triggers are pieces of code that are automatically executed in response to events on database tables. Typically, triggers are used to maintain data integrity, enforce business rules or perform repeatable tasks.

In Salesforce, triggers are executed before or after database operations such as insert, update, delete or undelete. You can define triggers for both standard and custom objects, and you can define multiple triggers for the same object.

There are many frameworks, such as Trigger Actions, that help organize the execution of triggers, but more on that in future articles!

Defining a Trigger

To define a trigger, you can use the following syntax:

trigger AccountTrigger on Account (before insert, after insert, before update, after update) {

// some code

new TriggerRunner(Account.sObjectType).run();

}

A good and recommended practice is to define only one trigger per object. This is important because, when there is more than one, the system does not guarantee the order in which those triggers are executed. Sometimes you need to break code up into separate blocks. In that case, instead of defining multiple triggers, it is better to use one of the trigger frameworks and split the code into handler classes.
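As a rough illustration of the "one trigger, logic in classes" idea, here is a minimal sketch that does not rely on any particular framework. The class name AccountTriggerDispatcher, its run method and the default description text are hypothetical names made up for this example; the trigger and the class would live in separate files.

```apex
// AccountTrigger.trigger: the single trigger for Account. It only delegates.
trigger AccountTrigger on Account (before insert, before update) {
    AccountTriggerDispatcher.run(
        Trigger.operationType,
        (List<Account>) Trigger.new
    );
}

// AccountTriggerDispatcher.cls: hypothetical class, saved as a separate file.
public with sharing class AccountTriggerDispatcher {
    public static final String DEFAULT_DESCRIPTION = 'No description provided';

    // Routes each trigger event to the block of logic responsible for it.
    public static void run(System.TriggerOperation operation, List<Account> newAccounts) {
        switch on operation {
            when BEFORE_INSERT, BEFORE_UPDATE {
                fillMissingDescriptions(newAccounts);
            }
            when else {
                // Other events need no logic in this sketch.
            }
        }
    }

    // Before-save logic: fields can be changed directly, no DML is needed.
    private static void fillMissingDescriptions(List<Account> records) {
        for (Account record : records) {
            if (String.isBlank(record.Description)) {
                record.Description = DEFAULT_DESCRIPTION;
            }
        }
    }
}
```

Because all logic for the object passes through one entry point, the order in which the individual blocks run is decided in code rather than left to the platform.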

Limits

Triggers are subject to governor limits, the same ones that apply to the rest of your Apex code. The most important limits to keep in mind are:

| Limit | Synchronous Limit | Asynchronous Limit |
|---|---|---|
| Total number of SOQL queries issued | 100 | 200 |
| Total number of records retrieved by SOQL queries | 50,000 | 50,000 |
| Total number of DML statements issued | 150 | 150 |
| Total stack depth for recursive Apex invocations | 16 | 16 |
| Total heap size | 6 MB | 12 MB |

This matters because, within a single transaction, records are processed in chunks of up to 200 records, so the trigger fires once per chunk while all of those invocations share the same governor limits. For frequently used objects such as Account or Contact, this can end in a limit exception that abruptly stops the whole operation:

FATAL_ERROR|System.LimitException: Too many SOQL queries: 101

In the case of a Bulk API call, the request is also split into chunks of 200 records, but each chunk of the same request gets a new set of governor limits.

Limits Example

To test this behavior, I will insert 500 accounts, once using the Bulk API and once through the UI. For that, I have defined a new accountTrigger trigger and an AccountTriggerHandler handler class:

trigger accountTrigger on Account (before insert) {

new AccountTriggerHandler().beforeInsert((List<Account>) Trigger.new);

}

public with sharing class AccountTriggerHandler {

public static Integer recordCounter = 0;

public void beforeInsert(List<Account> accounts){

recordCounter += accounts.size();

List<Contact> contact = [SELECT Id FROM Contact LIMIT 5];

System.debug('Current chunk: ' + accounts.size());

System.debug('Total: ' + recordCounter);

System.debug('SOQL Query Limit: ' + Limits.getQueryRows());

}

}

Let's see the results:

- Bulk API

USER_DEBUG|[8]|DEBUG|Current chunk: 200

USER_DEBUG|[9]|DEBUG|Total: 200

USER_DEBUG|[10]|DEBUG|SOQL Query Limit: 5

USER_DEBUG|[8]|DEBUG|Current chunk: 200

USER_DEBUG|[9]|DEBUG|Total: 400

USER_DEBUG|[10]|DEBUG|SOQL Query Limit: 5

USER_DEBUG|[8]|DEBUG|Current chunk: 100

USER_DEBUG|[9]|DEBUG|Total: 500

USER_DEBUG|[10]|DEBUG|SOQL Query Limit: 5

- Interface insert

USER_DEBUG|[8]|DEBUG|Current chunk: 200

USER_DEBUG|[9]|DEBUG|Total: 200

USER_DEBUG|[10]|DEBUG|SOQL Query Limit: 5

USER_DEBUG|[8]|DEBUG|Current chunk: 200

USER_DEBUG|[9]|DEBUG|Total: 400

USER_DEBUG|[10]|DEBUG|SOQL Query Limit: 10

USER_DEBUG|[8]|DEBUG|Current chunk: 100

USER_DEBUG|[9]|DEBUG|Total: 500

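USER_DEBUG|[10]|DEBUG|SOQL Query Limit: 15

As you can see, in both cases the operation was split into chunks of 200 records. With the Bulk API, however, the limit counter was reset for each chunk, while with the interface insert it kept accumulating (5, 10, 15). Note that the example logs Limits.getQueryRows(), which is the number of rows retrieved so far, but the number of SOQL queries issued accumulates in the same way. This means that even with only one SOQL query per trigger invocation, as in the example (returning only 5 records each time), a non-Bulk operation would use up the limit of 100 SOQL queries after 100 chunks, that is, after only 20,000 records!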
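To watch both counters side by side, a small helper along these lines could be called from the handler instead of the single debug statement. This is only a sketch: the class name LimitsLogger and its method are hypothetical, while the Limits methods it calls are part of the standard System.Limits class.

```apex
// LimitsLogger.cls: hypothetical helper for logging SOQL limit usage.
public with sharing class LimitsLogger {
    // Returns the used/allowed values for SOQL queries and for retrieved rows
    // in the current transaction.
    public static String describeSoqlUsage() {
        return 'Queries: ' + Limits.getQueries() + '/' + Limits.getLimitQueries()
            + ', rows: ' + Limits.getQueryRows() + '/' + Limits.getLimitQueryRows();
    }
}
```

Calling System.debug(LimitsLogger.describeSoqlUsage()); at the end of beforeInsert would show the number of queries growing by one per chunk in a non-Bulk transaction.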

Bulk Triggers

In the documentation, triggers are always mentioned as bulk, but what does it even mean?

As you will find out in the section below, in all trigger events we have access to one or more context variables that contain the records modified in the transaction. All of those variables are either of List or Map type. That means every event is prepared to handle more than one record at a time, and your code should follow the same principle and work on collections! In short, bulk means that a trigger invocation may receive many records at once, not just one.

By default, all triggers are bulk triggers and can process multiple records at once. That's why it is important to use collections and to expect large quantities of data. It is recommended to use Map and Set. Map is especially useful because it lets you tie records to their Ids, which makes navigating through datasets easier.
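The following sketch shows how Set and Map typically work together in bulk-safe code. It assumes a hypothetical ContactTriggerHandler that copies the parent Account's BillingCity into an empty MailingCity; the class and the business rule are invented for illustration, and only standard fields are used.

```apex
// ContactTriggerHandler.cls: hypothetical handler, called from a before insert trigger on Contact.
public with sharing class ContactTriggerHandler {
    public static void beforeInsert(List<Contact> newContacts) {
        // 1. Collect the parent Ids of all records in the chunk (a Set removes duplicates).
        Set<Id> accountIds = new Set<Id>();
        for (Contact newContact : newContacts) {
            if (newContact.AccountId != null) {
                accountIds.add(newContact.AccountId);
            }
        }
        if (accountIds.isEmpty()) {
            return;
        }

        // 2. One SOQL query for the whole chunk, loaded into a Map keyed by Id.
        Map<Id, Account> accountsById = new Map<Id, Account>(
            [SELECT Id, BillingCity FROM Account WHERE Id IN :accountIds]
        );

        // 3. Look up each parent in the Map instead of querying inside the loop.
        for (Contact newContact : newContacts) {
            Account parent = accountsById.get(newContact.AccountId);
            if (parent != null && String.isBlank(newContact.MailingCity)) {
                newContact.MailingCity = parent.BillingCity;
            }
        }
    }
}
```

No matter whether the chunk contains 1 or 200 contacts, this method issues a single SOQL query.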

Bulk Trigger Example

❌️ The operation is performed on only one record instead of on the entire request!

public class AccountTriggerHandler {

public static void afterInsert(List<Account> newAccounts) {

importantCalculation(newAccounts[0]);

}

private static void importantCalculation(Account account){

// do some important stuff

}

}

✅️ We make calculations using all records from the request.

public class AccountTriggerHandler {

public static void afterInsert(List<Account> newAccounts) {

importantCalculation(newAccounts);

}

private static void importantCalculation(List<Account> accounts){

for(Account account: accounts){

// do some important stuff

}

}

}

Validations in Triggers

If your logic requires more sophisticated checks than validation rules allow, you can build it using Apex.

For records that do not pass the validation, simply call the addError() method as shown below:

public void afterUpdate(List<Account> newAccounts){

for(Account account: newAccounts){

if(account.YearToYearEarnings__c < 5000){

account.addError('Earns too small amount');

}

}

}

This will prevent the selected record from being saved to the database.

If you want to show the messages in the interface, call this method on Trigger.new records in insert and update triggers, and on Trigger.old records in delete triggers.

When the trigger is fired by a DML statement in Apex, a single error rolls back the entire operation if it is defined as all-or-none (the system still processes all records so that it can report the full list of errors). Bulk DML calls through the API behave differently: the records with errors are set aside and the system attempts a partial save of the remaining records.
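In Apex itself, partial success can be requested by passing false as the allOrNone argument of the Database methods. The snippet below is a minimal sketch for anonymous execution; the failure here comes from a missing required field, and it assumes the org has no other validation that would block the first account. Errors added with addError() in a trigger are reported through the same SaveResult objects.

```apex
List<Account> accounts = new List<Account>{
    new Account(Name = 'Valid account'),
    new Account() // Name is required, so this record fails
};

// allOrNone = false: failed records are reported, the remaining ones are saved.
List<Database.SaveResult> results = Database.insert(accounts, false);

for (Integer i = 0; i < results.size(); i++) {
    if (!results[i].isSuccess()) {
        for (Database.Error err : results[i].getErrors()) {
            System.debug('Record ' + i + ' failed: ' + err.getMessage());
        }
    }
}
```

With the plain insert accounts; statement, or with allOrNone set to true, the same data would throw a DmlException and nothing would be saved.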

There are many use cases for the addError() method. Check out the official documentation: SObject Class.

Trigger Events

Each database operation causes a specific trigger event to be fired. The System.Trigger class provides variables to access data based on the event.

To get access to the modified records, you can use the following context variables:

| Trigger Event | Variables | Can modify record fields? | Can update original record? | Can delete original record? |
|---|---|---|---|---|
| before insert | Trigger.new | Yes | No | No |
| after insert | Trigger.new, Trigger.newMap | No (runtime error) | Yes | Yes |
| before update | Trigger.new, Trigger.old, Trigger.newMap, Trigger.oldMap | Yes | No | No |
| after update | Trigger.new, Trigger.old, Trigger.newMap, Trigger.oldMap | No (runtime error) | Yes | Yes |
| before delete | Trigger.old, Trigger.oldMap | No (runtime error) | Yes (updates are saved before delete) | No |
| after delete | Trigger.old, Trigger.oldMap | No (runtime error) | No | No |
| after undelete | Trigger.new, Trigger.newMap | No (runtime error) | Yes | Yes |

Trigger context variables hold generic SObject records, so you can cast the collections to the type of interest, for example to Account in a trigger defined on Account:

List<Account> newAccounts = (List<Account>) Trigger.new;

List<Account> oldAccounts = (List<Account>) Trigger.old;

Map<Id, Account> currentAccounts = (Map<Id, Account>) Trigger.newMap;

Map<Id, Account> previousAccounts = (Map<Id, Account>) Trigger.oldMap;

The after undelete trigger event runs only on top-level objects. For example, in an Account → Opportunity relationship, deleting an Account can also delete its Opportunities. When the Account is later recovered, and both objects have an after undelete trigger defined, only the one for Account will be fired.
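A common use of the old and new context variables in update events is to react only to records whose field actually changed. The sketch below assumes a hypothetical AccountChangeDetector class; in a trigger it would be called with the typed Trigger.new list and Trigger.oldMap map.

```apex
// AccountChangeDetector.cls: hypothetical helper for before/after update events.
public with sharing class AccountChangeDetector {
    // Returns the accounts whose Name differs from the previously saved version.
    public static List<Account> withChangedName(
        List<Account> newAccounts,
        Map<Id, Account> oldAccountsById
    ) {
        List<Account> changed = new List<Account>();
        for (Account updated : newAccounts) {
            // The old version of the same record is found by its Id.
            Account previous = oldAccountsById.get(updated.Id);
            // Note: != on Strings is case-insensitive in Apex, so a change
            // that only affects letter case is not detected here.
            if (previous != null && updated.Name != previous.Name) {
                changed.add(updated);
            }
        }
        return changed;
    }
}
```

Filtering records this way keeps expensive logic from running on every update of the object.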

Trigger Context

Triggers can be executed before or after a record is saved to the database, in response to the specific events described previously. You can use the System.Trigger class to get information about the current context of execution.

In the System.Trigger class we can find the following utility variables to determine the current execution context:

| Variable | Usage |
|---|---|
| isExecuting | true if the current logic is executed as part of a trigger call. |
| isInsert | true if the trigger fired due to an insert operation. |
| isUpdate | true if the trigger fired due to an update operation. |
| isDelete | true if the trigger fired due to a delete operation. |
| isBefore | true if the record is not yet saved to the database. |
| isAfter | true if the record is already saved to the database. |
| isUndelete | true if the record is recovered from the Recycle Bin. |
| operationType | enum of type System.TriggerOperation to determine the current operation. Possible values: BEFORE_INSERT, BEFORE_UPDATE, BEFORE_DELETE, AFTER_INSERT, AFTER_UPDATE, AFTER_DELETE, AFTER_UNDELETE |
| size | Total number of records in the trigger invocation, both old and new. |

Additional Considerations

- upsert fires before and after insert, or before and after update, depending on whether the record already exists

- merge fires before and after delete for the losing records, and before and after update for the surviving record

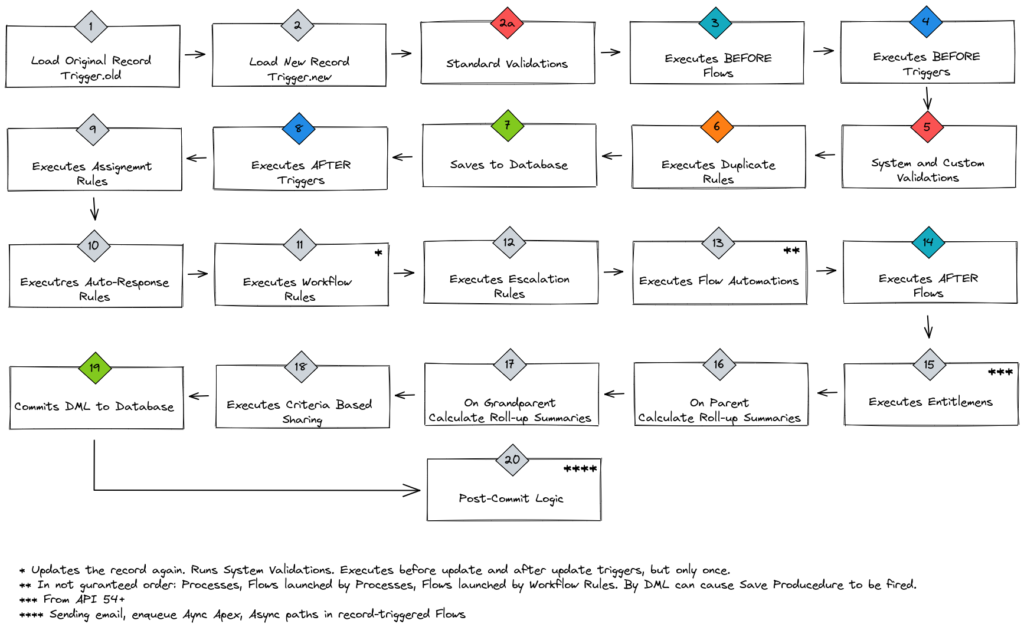

Order of Execution

During a database save operation, many steps are performed and many checks are run. The diagram shows what happens and in what order.